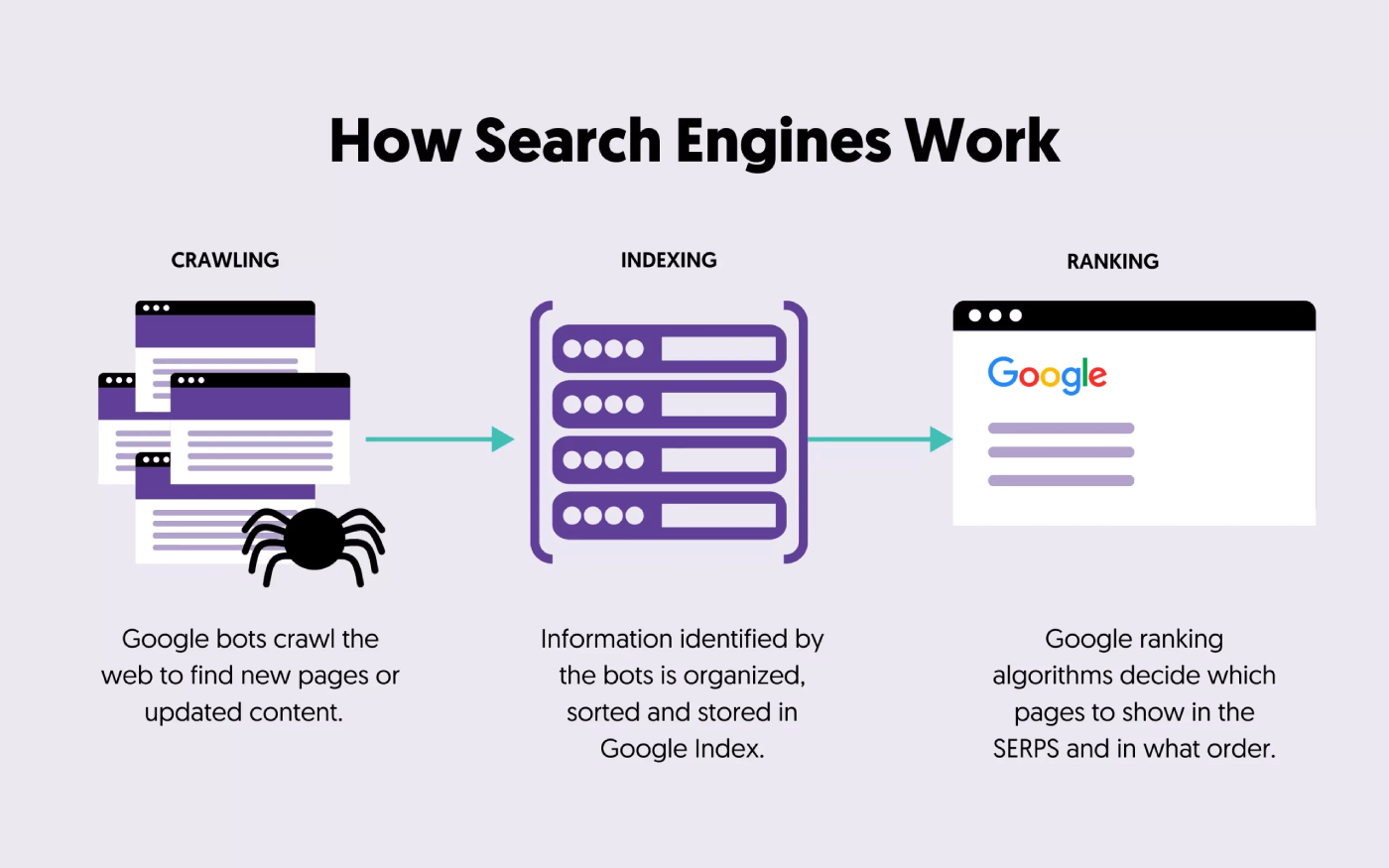

Crawl depth influences how efficiently Google can index your content.

Googlebot has limited time and server resources. Therefore, the crawl budget, or the number of pages Googlebot can crawl on your site during a specific time frame, is finite.

Crawl depth also impacts user experience. Visitors may struggle to access the necessary information if essential content is hard to find because of complex navigation or excessive depth.

In this article, I will explain what crawl depth means and why it's important in SEO.

Plus, I will share some tips to help you improve your website's crawl depth for better search engine performance.

What is Crawl Depth in SEO?

Crawl depth refers to how far a web page sits within a website's hierarchy, as measured from the homepage.

It represents how many clicks or steps a search engine's web crawler takes to reach a particular page from the website's homepage. Crawl depth is important because it can influence how effectively search engines discover and index your website's content.

Imagine you have a website with the following structure:

Homepage > Category A > Subcategory A1 > Page 1

The homepage has a crawl depth of 0 in this example because it's the starting point. Category A has a crawl depth of 1 because it's one click from the homepage, Subcategory A1 has a crawl depth of 2 because it's two clicks away, and Page 1 has a crawl depth of 3 because it's three clicks away.
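To make the counting concrete, here is a minimal sketch that computes crawl depth with a breadth-first search over a link graph. The `site_links` dictionary and the `crawl_depths` function are hypothetical names made up for this illustration. A real audit would build the graph from crawled pages rather than type it in by hand.

```python
from collections import deque

def crawl_depths(links, start):
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # in BFS, the first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical link graph mirroring the example structure above.
site_links = {
    "Homepage": ["Category A"],
    "Category A": ["Subcategory A1"],
    "Subcategory A1": ["Page 1"],
    "Page 1": [],
}

depths = crawl_depths(site_links, "Homepage")
for page, depth in depths.items():
    print(f"{page}: depth {depth}")
```

Because the search always takes the shortest path, adding a direct link from the homepage to Page 1 would drop that page's depth from 3 to 1.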

Why is Crawl Depth Important in SEO?

Crawl depth matters in SEO for several reasons:

- Indexing Efficiency: Pages buried deep within a website's structure may not be crawled and indexed as frequently as those closer to the homepage.

- Link Equity: Pages closer to the homepage often receive more link equity (authority) through internal and external links. This can affect their search engine rankings and visibility.

- User Experience: A complex and deep website structure can make finding important content challenging for users and search engines, potentially leading to a poor user experience.

- Freshness: Pages with a shallow crawl depth are more likely to be crawled frequently, allowing search engines to detect and index updates and changes more promptly.

How to Increase Crawl Depth Efficiency

Here are some of the best ways to improve crawl depth efficiency:

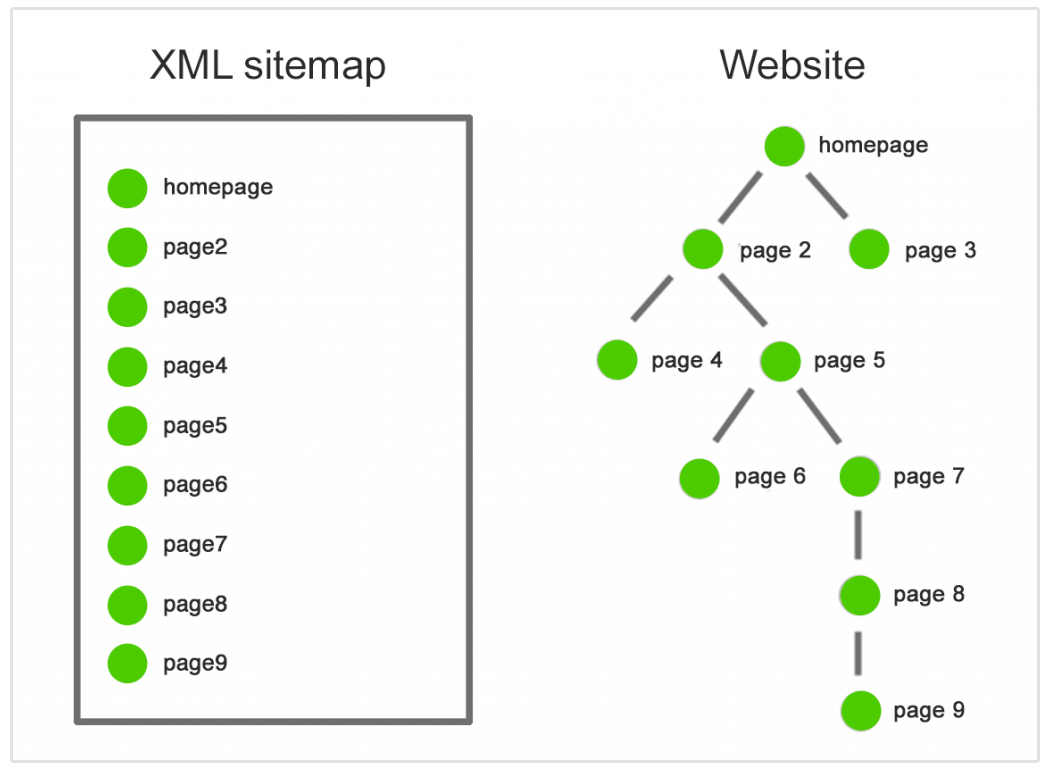

Regularly Update Sitemap

By submitting your sitemap, you make it simpler for search engines to crawl and index your web pages promptly.

Sitemaps should stay dynamic, always reflecting the current state of your website.

Remember to update your sitemap accordingly as you add new pages, update existing ones, or remove outdated content. This ensures that search engines always have access to an accurate and comprehensive list of your website's URLs.
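As a rough sketch of what "keeping the sitemap dynamic" can look like, the snippet below regenerates the sitemap XML from a list of pages using Python's standard library. The `build_sitemap` function, the example.com URLs, and the dates are assumptions for illustration only. In practice, the page list would come from your CMS or database.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical pages; re-run this whenever pages are added, updated, or removed.
pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/category-a/", "2024-01-10"),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Running this on every publish or delete is one simple way to keep removed URLs out of the sitemap and new ones in it.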

Improve Website Navigation and Structure

Improving your website's navigation and structure is fundamental to web design and SEO.

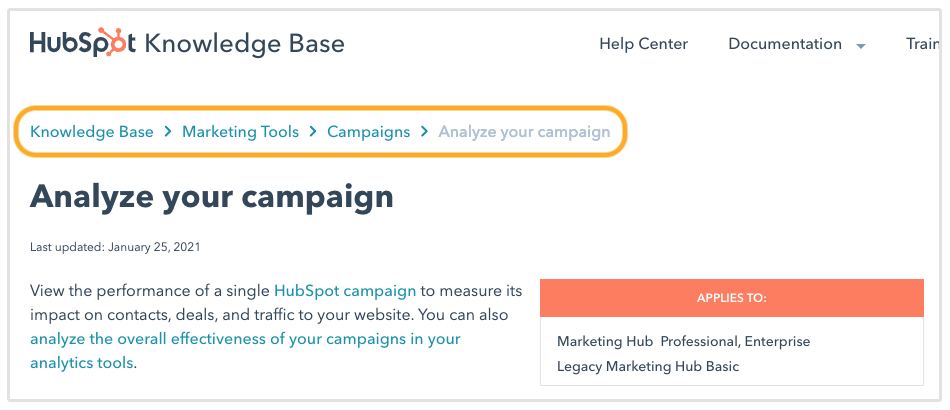

Start by streamlining your navigation menus, categorizing content logically, and using clear, descriptive labels. Create a clear hierarchy for your website, ensuring that subcategories and pages are organized beneath relevant parent categories.

Implement breadcrumb navigation to guide users and search engines through your site's structure.

Finally, optimize your URL structure to create clean and descriptive URLs and maintain an up-to-date XML sitemap to aid search engine indexing.
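Here is a small sketch of how a breadcrumb trail could be derived from a clean URL. It assumes the URL path mirrors the site hierarchy and that slugs use hyphens, which will not hold for every site. The `breadcrumb_trail` function and the example URL are hypothetical.

```python
from urllib.parse import urlparse

def breadcrumb_trail(url):
    """Return (label, url) pairs from the homepage down to the given page."""
    parsed = urlparse(url)
    base = f"{parsed.scheme}://{parsed.netloc}"
    trail = [("Home", base + "/")]
    path = ""
    for segment in parsed.path.strip("/").split("/"):
        if not segment:
            continue  # skip empty segments, e.g. when the URL is the homepage
        path += "/" + segment
        label = segment.replace("-", " ").title()  # "category-a" -> "Category A"
        trail.append((label, base + path + "/"))
    return trail

trail = breadcrumb_trail("https://example.com/category-a/subcategory-a1/page-1/")
print(" > ".join(label for label, _ in trail))
```

Each entry in the trail is also an internal link to a parent page, so breadcrumbs give crawlers a path back up the hierarchy from every deep page.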

Add More Internal Links

Internal links are important in guiding search engine crawlers through your website.

When you add internal links strategically within your content, you provide clear pathways for search engine bots to follow from one page to another.

Adding internal links to various pages across your site allows you to distribute the crawling activity more evenly. This ensures that different parts of your website receive attention from search engine bots, preventing some pages from being overlooked.

In addition, internal links encourage search engine bots to go deeper into your site's hierarchy. When an important page is linked from multiple sources, it signals to search engines that the page is significant and deserves thorough indexing.
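One way to spot pages that need more internal links is to count how many other pages link to each one. The sketch below does that on a hypothetical link graph. The `site_links` data, the `inbound_link_counts` function, and the threshold of two inbound links are all assumptions chosen for this example.

```python
def inbound_link_counts(links):
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = {page: 0 for page in links}
    for source, targets in links.items():
        for target in set(targets):  # count each source page only once
            if target != source:
                counts[target] = counts.get(target, 0) + 1
    return counts

# Hypothetical site: each key is a page, each value lists the pages it links to.
site_links = {
    "/": ["/category-a/", "/blog/"],
    "/category-a/": ["/category-a/page-1/", "/"],
    "/category-a/page-1/": ["/"],
    "/blog/": ["/category-a/", "/"],
    "/old-landing-page/": ["/"],
}

counts = inbound_link_counts(site_links)
weakly_linked = sorted(page for page, n in counts.items() if n < 2)
print(weakly_linked)
```

Pages with a count of zero, like `/old-landing-page/` here, have no internal links pointing to them at all, so they are the first candidates for new links.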

Improve Website Performance

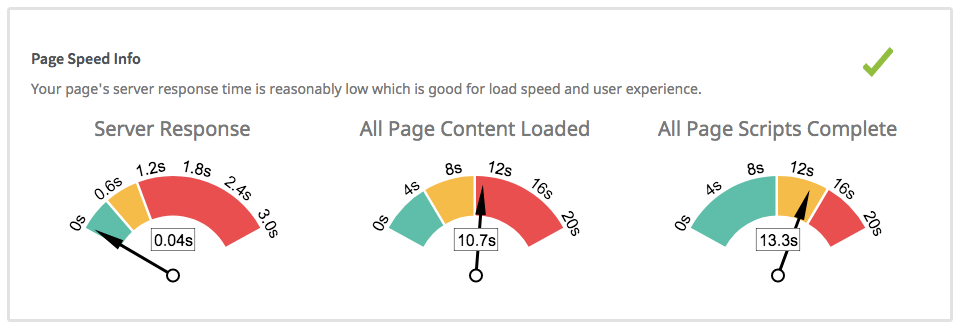

Improving website performance directly impacts crawl rate.

A fast-loading website with optimized images, minimal HTTP requests, efficient server response times, and reduced server load can significantly enhance the efficiency of search engine crawlers.

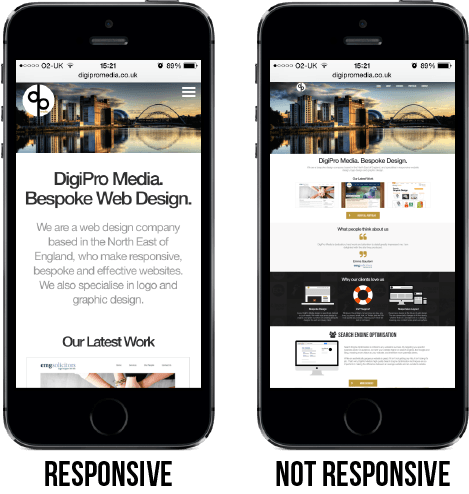

When a website is responsive and loads quickly, search engines can crawl its pages more rapidly and efficiently. This means that search engine bots can cover more ground within the allocated crawl budget, leading to more comprehensive indexing of your site's content.

Luckily, most website builders and modern content management systems offer mobile-responsive themes.

Consequently, a well-performing website provides an improved user experience and facilitates faster and more thorough search engine indexing, which can positively influence your SEO rankings.
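A quick back-of-the-envelope calculation shows why response time matters. The sketch below assumes a crawler that fetches pages one at a time within a fixed time budget. That is a deliberate simplification, since real crawlers fetch in parallel and adjust their rate. The 10-minute budget and both response times are made-up numbers, not measurements.

```python
def pages_per_budget(budget_seconds, avg_response_seconds):
    """Estimate how many pages fit in a time budget when fetched one at a time."""
    return int(budget_seconds // avg_response_seconds)

budget = 600  # hypothetical crawl window: 10 minutes

slow_site = pages_per_budget(budget, 2.0)  # 2 seconds per page
fast_site = pages_per_budget(budget, 0.5)  # 0.5 seconds per page

print(f"Slow site: about {slow_site} pages")
print(f"Fast site: about {fast_site} pages")
```

Under these assumptions, cutting the average response time from 2 seconds to 0.5 seconds lets the crawler fetch four times as many pages in the same window.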

What can Cause Crawl Depth Issues?

Here are common factors that can lead to crawl depth problems:

- Complex Website Structure: Websites with overly complex hierarchies and deep navigational paths can make it challenging for search engine bots to reach deeper pages quickly. This complexity may result from excessive subcategories or inefficient organization.

- Insufficient Internal Linking: If your website lacks proper internal linking, particularly links pointing to deeper pages, search engine bots may struggle to discover and crawl those pages. Insufficient internal links limit the pathways for crawlers to follow.

- Orphaned Pages: Orphaned pages are those without any internal links pointing to them. When pages are isolated from the rest of the website's structure, search engines may not easily find and index them.

- Broken Links and Redirect Chains: Broken links or improper redirects can disrupt crawling. Search engine bots may hit dead ends, long redirect chains, or redirect loops, preventing them from reaching deeper pages.

- Slow Page Load Times: When pages respond slowly, search engine bots tend to reduce how much they crawl, so fewer pages get fetched in each visit. Slow-loading pages can hinder the indexing of deeper content.

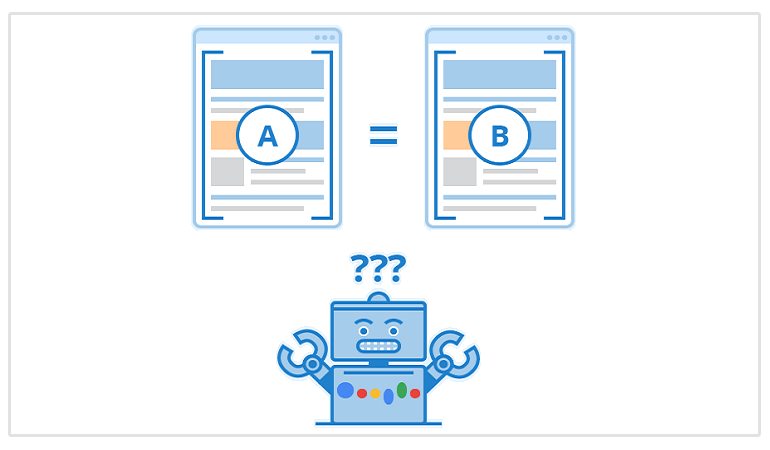

- Duplicate Content: Duplicate content issues can confuse search engine bots, leading to inefficient crawling. When bots encounter multiple versions of the same content, they may not prioritize indexing all instances.

- Noindex Tags: The "noindex" meta tag or directive instructs search engines not to index particular pages. When essential pages carry this tag unintentionally, they can drop out of search results entirely.

- Changes in Website Structure: Frequent changes to your website's structure without proper redirection or updating of internal links can confuse search engine bots. They may attempt to crawl non-existent or outdated pages.
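Redirect chains and loops from the list above are easy to check once you have a map of your redirects. The sketch below walks such a map without making any network requests. The `redirects` dictionary, the `follow_redirects` function, and the limit of 10 hops are hypothetical and only serve this illustration.

```python
def follow_redirects(start, redirects, max_hops=10):
    """Follow a redirect map from `start`; return (final_url, hops, status)."""
    seen = {start}
    current = start
    hops = 0
    while current in redirects:
        current = redirects[current]
        hops += 1
        if current in seen:
            return current, hops, "loop"  # we are back at a URL already visited
        if hops >= max_hops:
            return current, hops, "too many hops"
        seen.add(current)
    return current, hops, "ok"

# Hypothetical redirect map: old URL -> URL it redirects to.
redirects = {
    "/old-page/": "/interim-page/",
    "/interim-page/": "/new-page/",
    "/a/": "/b/",
    "/b/": "/a/",
}

print(follow_redirects("/old-page/", redirects))
print(follow_redirects("/a/", redirects))
```

Any URL that needs more than one hop is worth pointing straight at its final destination, and any URL flagged as a loop needs fixing before crawlers can get past it.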

Conclusion

Crawl depth plays a vital role in how search engines discover and index your website's content. It impacts indexing efficiency, SEO performance, user experience, and how frequently Googlebot revisits your pages.

By optimizing your website's structure, internal linking, and other technical aspects, you can ensure that important content receives the attention it deserves from search engines.